The “GPT” AI models, developed by OpenAI, are advanced large language models (LLMs) capable of understanding and generating human-like text, offering significant potential for municipal governments in Northwestern Ontario. These models excel in tasks such as drafting policy documents, responding to citizen inquiries, and summarizing large volumes of text data, making them versatile tools for various administrative and communication purposes. While they provide opportunities for enhancing efficiency, improving citizen engagement, and ensuring cost-effectiveness, it’s crucial to consider their limitations, including potential biases and ethical concerns related to AI implementation in public sectors. The successful integration of GPT models into municipal functions also involves addressing challenges such as infrastructure development, staff training, and public transparency. As these models continuously evolve, they present an opportunity for municipalities to leverage the latest technological advancements in governance.

This study explores the challenges of AI adoption in German municipalities from the perspective of employees. It extends the Technology-Organization-Environment (TOE) framework by identifying perceived challenges in adopting AI in the public sector.

Authors: Cindy Schaefer, K. Lemmer, Kret Samy Kret, Maija Ylinen, Patrick Mikalef, Björn Niehaves

This research compares the discursive constructions of AI policies between North America and East Asia. It uses corpus data for quantitative study and adopts a dialectical-relational approach to analyze the results, revealing differences in priorities and applications of AI between these regions.

Authors: Feng Gu

This article explores the impact of AI use in the public sector on core governance functions. A review of 250 cases across the European Union shows that AI is mainly used to improve public service delivery, enhance internal management, and assist in policy decision-making. The study highlights the need for in-depth investigation to understand the role and impact of AI in governance.

Authors: C. V. Noordt, Gianluca Misuraca

This paper examines the role of AI in smart city development, focusing on public services. It discusses the evolution of smart cities and the involvement of Internet giants in public service platforms. The study emphasizes the need for pilot smart cities that link AI technology with public services for sustainable development and explores how AI technology can enhance urban public services.

Authors: Jin Zhou

This research examines current attempts at AI governance and addresses common concerns, including a case study on Amazon’s Alexa and a survey of U.S. public opinion on AI. It highlights key areas for governing bodies to address regarding AI’s ethical and security issues.

Authors: Adora Ndrejaj, Maaruf Ali

Research on GPT models is a continuously evolving field, with ongoing advancements aimed at improving their accuracy, efficiency, and applicability. Scientists and engineers are consistently working to address challenges like bias reduction and context understanding in these models. This constant development ensures that GPT models remain at the forefront of AI technology, expanding their potential uses and capabilities.

ChatGPT is an AI chatbot created by OpenAI, using technology that allows it to converse like a human. For municipal governments, it can be a useful tool for automating responses to common public inquiries, aiding in drafting documents, or providing quick information. It’s trained on a large amount of text, so it understands a variety of topics, but it doesn’t replace the need for up-to-date, local knowledge. ChatGPT can help streamline some administrative tasks, making communication more efficient in a municipal setting.

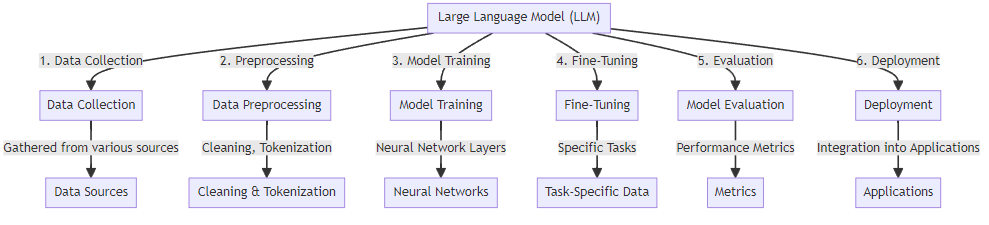

This diagram outlines the key components and processes involved in the development and functioning of a Large Language Model (LLM). It includes stages such as data collection, preprocessing, model training, fine-tuning, evaluation, and deployment. Each stage plays a critical role in ensuring the effectiveness and accuracy of the LLM. The diagram also shows the specific tasks and elements involved in each stage, such as data sources, cleaning and tokenization, neural networks, task-specific data, performance metrics, and application integration.

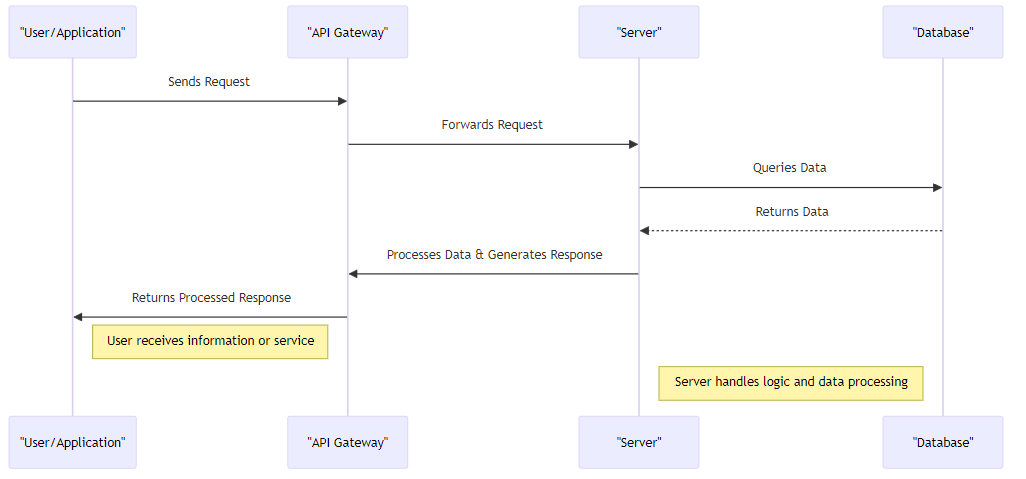

An application programming interface is a way for two or more computer programs to communicate with each other. It is a type of software interface, offering a service to other pieces of software.

In this diagram, the process begins with a user or an application sending a request to the API gateway. The gateway forwards the request to a server, which processes it, often by querying a database. Once the database returns the requested data, the server builds a response and sends it back to the application.

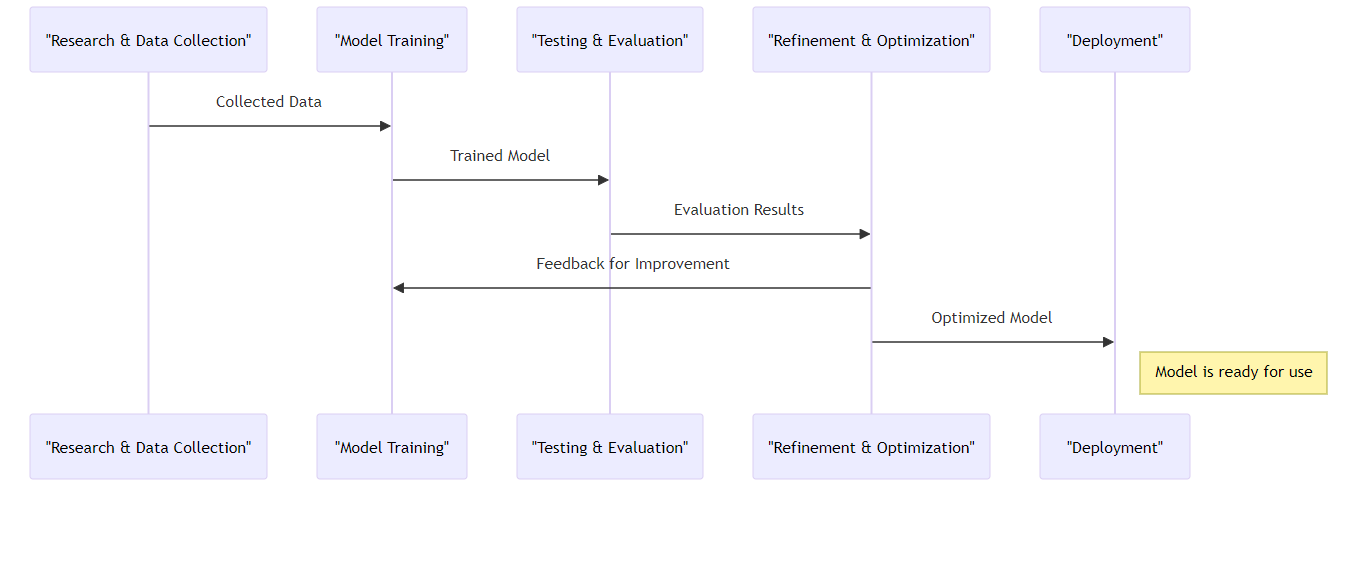
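The request flow described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a real web service: the `/services/` route, the `DATABASE` contents, and all function names are assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical in-memory "database" of municipal service records.
DATABASE = {
    "garbage-pickup": {"day": "Tuesday", "zone": "North"},
    "snow-removal": {"day": "as needed", "zone": "All"},
}


@dataclass
class Response:
    status: int
    body: dict


def query_database(key):
    """Database layer: return the stored record, or None if absent."""
    return DATABASE.get(key)


def server_handle(path):
    """Server layer: look up the data and shape it into a response."""
    service = path.rsplit("/", 1)[-1]
    record = query_database(service)
    if record is None:
        return Response(404, {"error": f"unknown service: {service}"})
    return Response(200, {"service": service, **record})


def api_gateway(method, path):
    """Gateway layer: validate the request, then forward it to the server."""
    if method != "GET":
        return Response(405, {"error": "method not allowed"})
    if not path.startswith("/services/"):
        return Response(404, {"error": "unknown route"})
    return server_handle(path)


# The application sends a request and receives the response.
result = api_gateway("GET", "/services/garbage-pickup")
```

In a deployed system each layer would typically run as a separate process or service; keeping them as plain functions here only makes the order of the hand-offs visible.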

Training a Large Language Model (LLM) is a complex and resource-intensive deep learning process, typically built on a neural network architecture known as the transformer. The model is first fed a very large dataset of text from a wide range of sources, such as books, articles, websites, and other written materials, so that it covers diverse topics and styles. This training data helps the model learn language patterns, grammar, semantics, and contextual nuances. During training, the model repeatedly predicts the next word in a passage, and the error in each prediction is propagated back through the network (a process known as backpropagation) to indicate how its parameters should change. The model’s parameters, potentially numbering in the billions, are adjusted step by step to minimize the difference between its predictions and the actual text. Over many iterations, the LLM learns to generate coherent, contextually appropriate text, which lets it respond to human language fluently, although not always correctly.

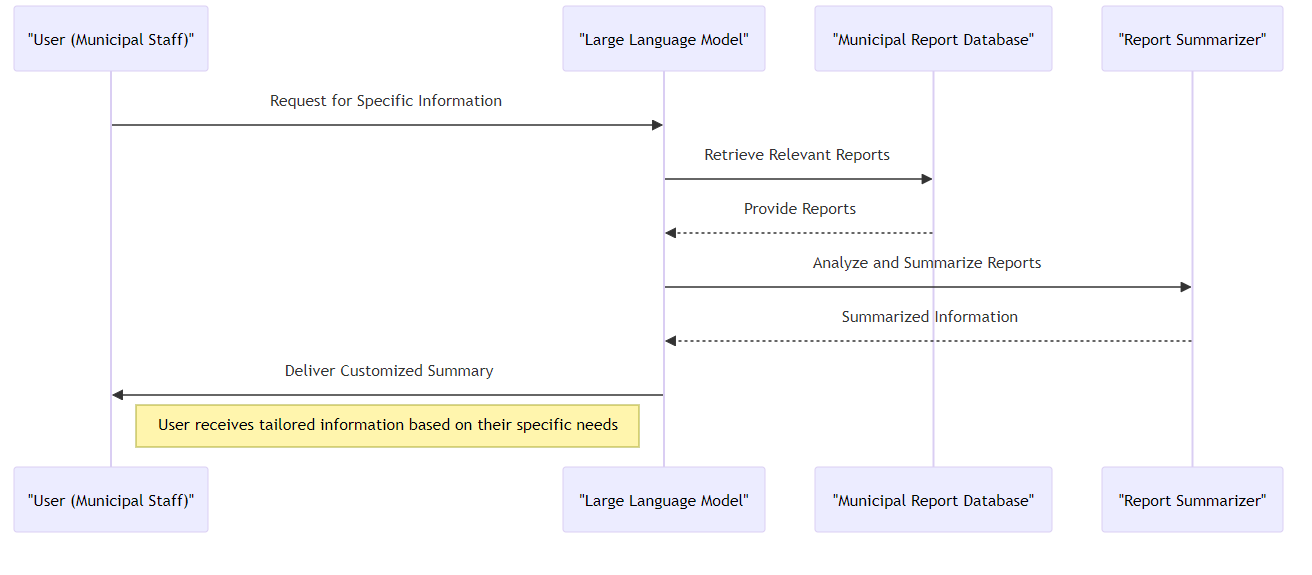
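The idea of predicting the next word and adjusting parameters to reduce the error can be shown at toy scale. The sketch below is not a transformer and uses no deep learning library: it trains a single table of scores (one row per current word) by gradient descent on a one-sentence corpus invented for the example. Real LLM training applies the same principle across many layers and billions of parameters.

```python
import math

# Tiny made-up corpus; a real model trains on vastly more text.
corpus = "the council approved the budget and the council adjourned".split()
vocab = sorted(set(corpus))
index = {word: i for i, word in enumerate(vocab)}
V = len(vocab)

# Training examples: (current word, next word) pairs.
pairs = [(index[a], index[b]) for a, b in zip(corpus, corpus[1:])]

# Parameters: W[i][j] scores how likely word j is to follow word i.
W = [[0.0] * V for _ in range(V)]


def softmax(row):
    """Turn a row of scores into probabilities that sum to 1."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def average_loss():
    """Mean negative log-probability assigned to the true next word."""
    return -sum(math.log(softmax(W[a])[b]) for a, b in pairs) / len(pairs)


def train(steps=300, lr=0.1):
    """Gradient descent: nudge scores toward the observed next words."""
    for _ in range(steps):
        for a, b in pairs:
            probs = softmax(W[a])
            for j in range(V):
                # Gradient of the loss with respect to score W[a][j].
                grad = probs[j] - (1.0 if j == b else 0.0)
                W[a][j] -= lr * grad


def predict_next(word):
    """Return the most probable next word under the current parameters."""
    probs = softmax(W[index[word]])
    return vocab[max(range(V), key=probs.__getitem__)]


loss_before = average_loss()
train()
loss_after = average_loss()
```

Before training every next word is equally likely; afterwards the loss is lower and `predict_next("the")` returns the word that most often followed "the" in the corpus.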

This diagram shows the process starting from the user’s request for specific information, through the LLM’s interaction with the municipal report database, to the summarization of reports and the delivery of customized summaries back to the user. It highlights the role of the LLM in facilitating efficient and tailored access to information for municipal staff.

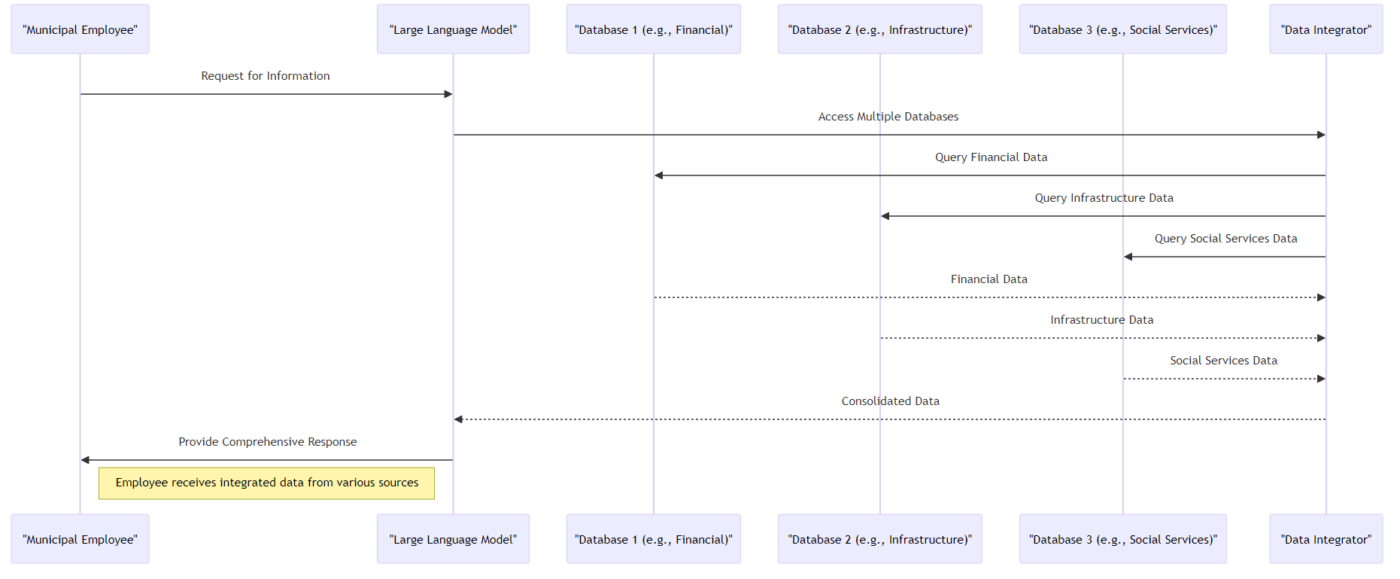
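A rough sketch of the report-summarization flow described above follows. The report titles and text are invented, and `placeholder_summarizer` is a simple keyword filter standing in for a call to an LLM; a real deployment would replace it with a request to whichever model the municipality uses.

```python
# Hypothetical municipal report database (titles and text are invented).
REPORTS = {
    "2023 Road Maintenance": (
        "Crews resurfaced several roads. Costs rose due to asphalt prices. "
        "Two bridges need inspection."
    ),
    "2023 Water Quality": (
        "All samples met provincial standards. One pump station was upgraded."
    ),
}


def find_reports(query, reports):
    """Retrieve reports whose title or body mentions any query term."""
    terms = query.lower().split()
    return {
        title: body
        for title, body in reports.items()
        if any(t in title.lower() or t in body.lower() for t in terms)
    }


def placeholder_summarizer(text, focus):
    """Stand-in for an LLM: keep sentences on the focus, else the first one."""
    sentences = [s.strip() for s in text.split(".") if s.strip()]
    hits = [s for s in sentences if focus.lower() in s.lower()]
    return ". ".join(hits or sentences[:1]) + "."


def summarize_reports(query, focus, reports=REPORTS,
                      summarizer=placeholder_summarizer):
    """User request -> matching reports -> one customized summary each."""
    matches = find_reports(query, reports)
    return {title: summarizer(body, focus) for title, body in matches.items()}


summaries = summarize_reports("road", focus="bridges")
```

Passing the summarizer in as a parameter keeps the retrieval step independent of the model, so the stand-in can be swapped for a real LLM call without touching the rest of the flow.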

This diagram shows the interaction between a municipal employee and the LLM, which accesses multiple databases (such as financial, infrastructure, and social services) through a data integrator. The LLM then provides a comprehensive response to the employee, integrating data from these various sources. This process enables the employee to receive a consolidated view of information across different municipal sectors.

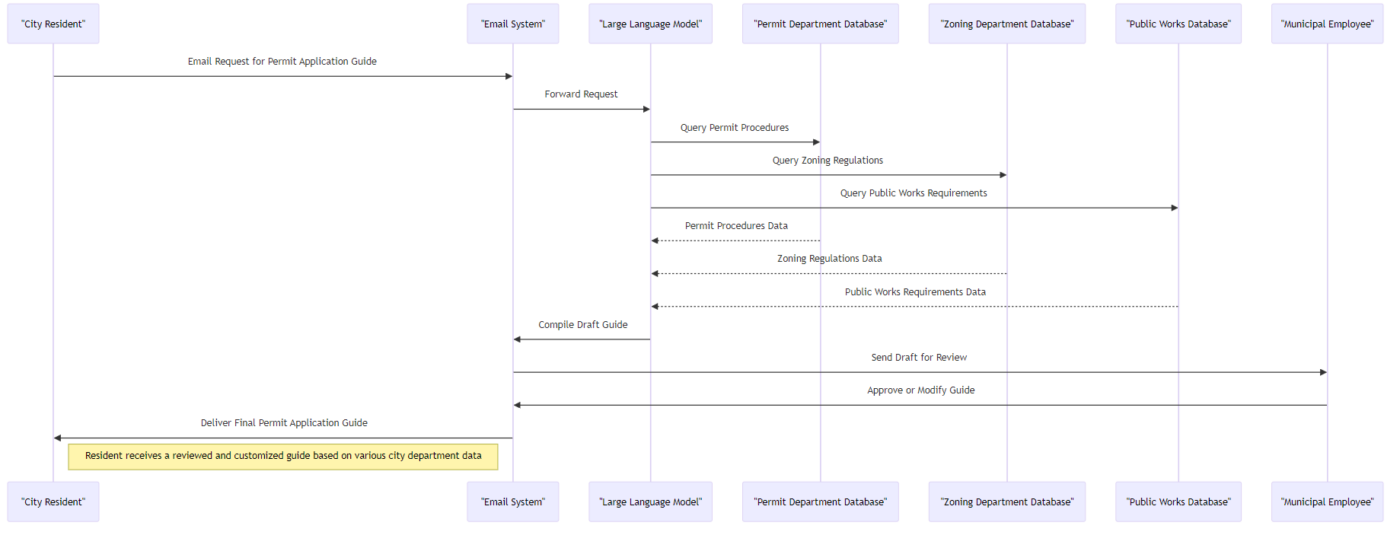
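One way to picture the data integrator is as a function that gathers records on a topic from each departmental source and hands them to the LLM as a single block of context. The sketch below assumes three dictionaries in place of real databases; the source names follow the diagram, but the records and function names are hypothetical.

```python
# Hypothetical departmental data stores; real ones would be databases or APIs.
SOURCES = {
    "financial": {"main street": "Resurfacing is funded in the capital budget."},
    "infrastructure": {"main street": "Water main replacement is scheduled first."},
    "social services": {"housing": "Waitlist review is under way."},
}


def integrate(topic, sources=SOURCES):
    """Data integrator: gather every record on the topic, tagged by source."""
    key = topic.lower()
    return [
        (name, records[key])
        for name, records in sources.items()
        if key in records
    ]


def build_prompt(question, topic, sources=SOURCES):
    """Combine the gathered records and the question into one LLM prompt."""
    records = integrate(topic, sources)
    if not records:
        return f"No municipal records found for '{topic}'. Question: {question}"
    context = "\n".join(f"[{name}] {fact}" for name, fact in records)
    return (
        "Use only the records below to answer.\n"
        f"{context}\n"
        f"Question: {question}"
    )


prompt = build_prompt("What work is planned?", "Main Street")
```

Tagging each record with its source lets the model's answer, and the employee reading it, trace a statement back to the department it came from.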

This diagram shows the process starting from the city resident’s email request, through the email system forwarding the request to the LLM, to the LLM querying various department databases (like the Permit Department, Zoning Department, and Public Works). The LLM then compiles the information into a guide and sends it back to the resident via the email system. This process enables the resident to receive a comprehensive and customized guide on applying for a permit.

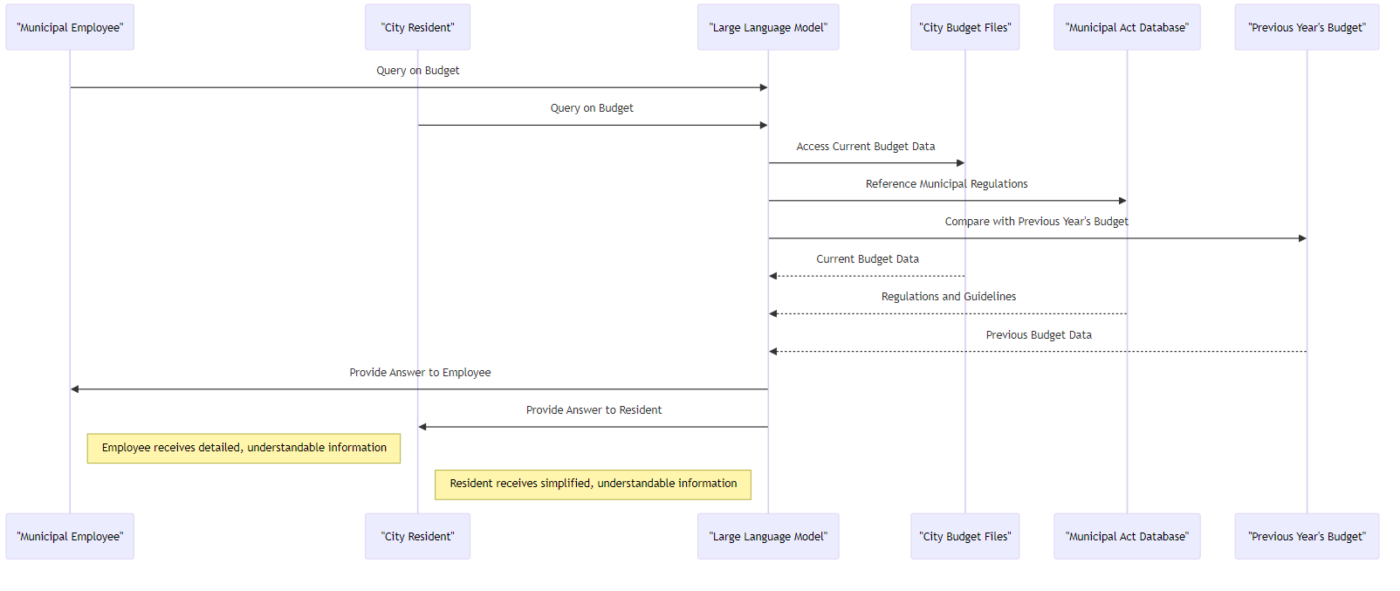
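The email round trip described above can be sketched with Python's standard `email` library. The department lookups are hypothetical stand-ins for database queries, the addresses are examples, and the permit type is passed in explicitly, whereas in the described system the LLM would extract it from the resident's message and draft the wording of the guide.

```python
from email.message import EmailMessage

# Hypothetical department lookups; each returns guidance for a permit type.
DEPARTMENTS = {
    "Permit Department": lambda kind: f"Submit the {kind} permit application form.",
    "Zoning Department": lambda kind: f"Confirm that zoning allows a {kind}.",
    "Public Works": lambda kind: f"Check for utility conflicts before building a {kind}.",
}


def build_reply(incoming, permit_kind):
    """Query each department and compile the answers into a reply email."""
    steps = [
        f"{number}. {department}: {lookup(permit_kind)}"
        for number, (department, lookup) in enumerate(DEPARTMENTS.items(), 1)
    ]
    reply = EmailMessage()
    # Swap sender and recipient so the guide goes back to the resident.
    reply["To"] = str(incoming["From"])
    reply["From"] = str(incoming["To"])
    reply["Subject"] = "Re: " + str(incoming["Subject"])
    reply.set_content("\n".join(steps))
    return reply


# Example request from a resident (addresses are placeholders).
incoming = EmailMessage()
incoming["From"] = "resident@example.com"
incoming["To"] = "permits@city.example"
incoming["Subject"] = "How do I apply for a deck permit?"
incoming.set_content("I would like to build a deck.")

reply = build_reply(incoming, "deck")
```

Actually sending the reply would need an SMTP connection, which is left out here so the sketch runs without a mail server.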

In this diagram, both a municipal employee and a city resident submit queries about the budget to the LLM. The LLM then accesses the current city budget files, the Municipal Act database, and data from the previous year’s budget. After processing this information, the LLM provides answers to both the employee and the resident. The key aspect here is that the LLM tailors the complexity and detail of the information to suit the understanding level of the employee and the resident, ensuring that both parties receive understandable and relevant information.

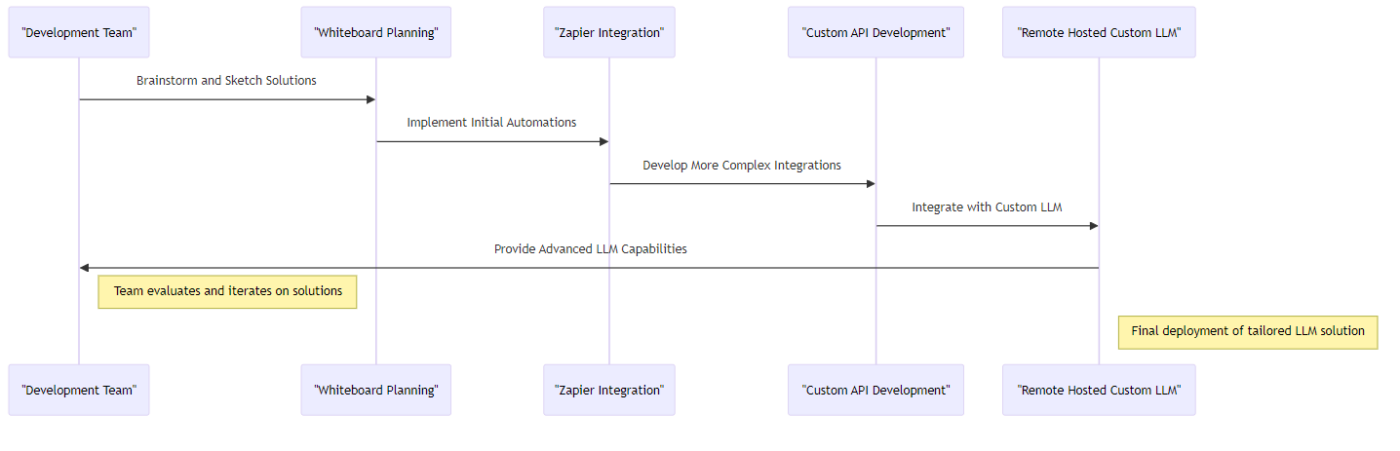
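Tailoring an answer to the audience is commonly done by changing the instructions sent to the model rather than the model itself. The sketch below builds a different prompt for an employee and for a resident asking the same budget question. The style instructions and function name are assumptions for illustration, and no model is actually called.

```python
# Hypothetical style instructions per audience.
AUDIENCE_STYLE = {
    "employee": (
        "Use technical budget terminology and cite line items "
        "and relevant Municipal Act sections."
    ),
    "resident": "Use plain language, avoid jargon, and keep the answer brief.",
}

# Sources named in the diagram description.
DOCUMENTS = [
    "current city budget",
    "Municipal Act database",
    "previous year's budget",
]


def build_budget_prompt(question, audience, documents):
    """Return a prompt whose style instructions match the audience."""
    if audience not in AUDIENCE_STYLE:
        raise ValueError(f"unknown audience: {audience}")
    sources = "\n".join(f"- {name}" for name in documents)
    return (
        f"Audience: {audience}\n"
        f"Style: {AUDIENCE_STYLE[audience]}\n"
        f"Consult these sources:\n{sources}\n"
        f"Question: {question}"
    )


question = "Why did the roads budget increase?"
employee_prompt = build_budget_prompt(question, "employee", DOCUMENTS)
resident_prompt = build_budget_prompt(question, "resident", DOCUMENTS)
```

Both prompts point the model at the same sources, so the facts stay consistent while the level of detail differs.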

The development process begins with the team brainstorming and sketching solutions on a whiteboard. This initial phase involves creative planning and outlining the basic structure of the solution. Next, the team implements initial automations using Zapier, which allows for the integration of various applications and services without the need for complex coding.

As the project progresses, the team moves on to develop more complex integrations through custom API development. This step involves creating APIs that can handle specific tasks and workflows required by the municipality.

Finally, the process culminates with the integration of these solutions with a custom Large Language Model (LLM) that is remotely hosted. This LLM provides advanced capabilities tailored to the municipality’s needs, such as processing large volumes of data, understanding natural language queries, and generating insightful responses.

Throughout this process, the team continuously evaluates and iterates on the solutions to ensure they meet the specific requirements and goals of the municipality. The final deployment of the tailored LLM solution marks the completion of this development journey.